Description

Answering complex questions over knowledge bases (KB-QA) faces huge input data with billions of facts, involving millions of entities and thousands of predicates. For efficiency, QA systems first reduce the answer search space by identifying a set of facts that is likely to contain all answers and relevant cues. The most common technique or doing this is to apply named entity disambiguation (NED) systems to the question, and retrieve KB facts for the disambiguated entities.This work presents CLOCQ, an efficient method that prunes irrelevant parts of the search space using KB-aware signals. CLOCQ uses a top-k query processor over score-ordered lists of KB-items that combine signals about lexical matching, relevance to the question, coherence among candidate items, and connectivity in the KB graph. Experiments with two recent QA benchmarks for complex questions demonstrate the superiority of CLOCQ over state-of-the-art baselines with respect to answer presence, size of the search space, and runtimes.

GitHub link to CLOCQ code Directly download CLOCQ code

Overview

For search space reduction, CLOCQ takes as input all facts in the KB and the question, and retrieves a set of candidate KB-items for each question word. These KB-items are scored making use of global signals (connectivity in KB-graph, semantic coherence), and local signals (question relatedness, term-matching), and the top-k KB items for each question word are detected. Since the choice of k is not straightforward, CLOCQ provides a mechanism to set k automatically, for each individual question word. For the question "who scored in the 2018 final between france and croatia?", "2018 final" is more ambiguous than "scored", and therefore CLOCQ would consider more KB-items to account for potential errors.Finally, salient facts with disambiguated KB-items are retrieved and can be passed to a QA system as the search space.

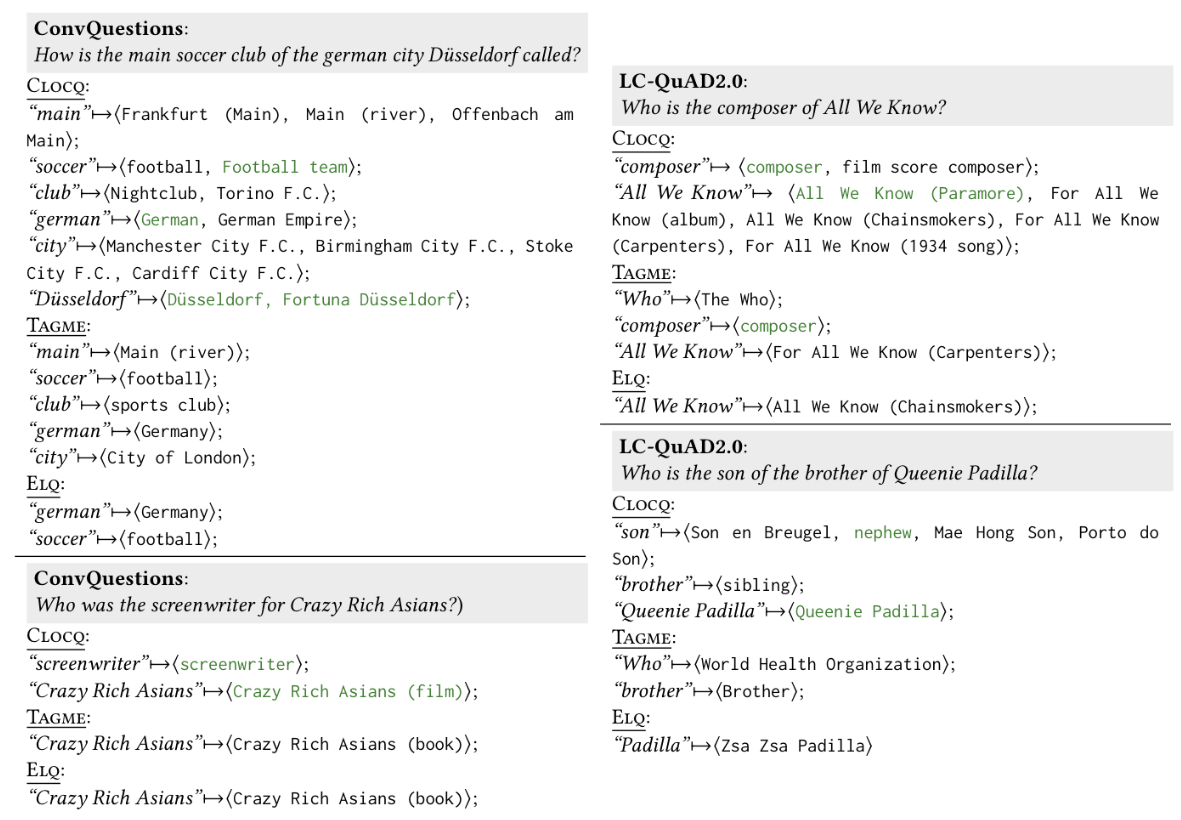

Some example disambiguations of CLOCQ (and baselines) can be found here:

Correct disambiguations are colored in green. The examples illustrate how CLOCQ can adjust the parameter k dynamically and allow for disambiguation errors in case of very ambiguous question words (e.g. for "All We Know", or "son"). In the first example, even though CLOCQ maps some question words to incorrect KB-items, the robustness of CLOCQ helps to identify the important KB-items for answering the question (Football team, Düsseldorf, and Fortuna Düsseldorf).

For additional details, please refer to the paper.

Contact

For feedback and clarifications, please contact: Philipp Christmann (pchristm AT mpi HYPHEN inf DOT mpg DOT de), Rishiraj Saha Roy (rishiraj AT mpi HYPHEN inf DOT mpg DOT de) or Gerhard Weikum (weikum AT mpi HYPHEN inf DOT mpg DOT de).To know more about our group, please visit https://www.mpi-inf.mpg.de/departments/databases-and-information-systems/research/question-answering/.

Related Papers

"Beyond NED: Fast and Effective Search Space Reduction for Complex Question Answering over Knowledge Bases", Philipp Christmann, Rishiraj Saha Roy, and Gerhard Weikum. In WSDM '22, Phoenix, Arizona, 21 - 25 February 2022.[Extended version] [Code] [Poster] [Slides] [Video] [Extended Video]

"CLOCQ: A Toolkit for Fast and Easy Access to Knowledge Bases", Philipp Christmann, Rishiraj Saha Roy, and Gerhard Weikum. In BTW '23, Dresden, Germany, 6 - 10 March 2023.

[Code] [Poster] [Slides]

"Question Entity and Relation Linking to Knowledge Bases via CLOCQ", Philipp Christmann, Rishiraj Saha Roy, and Gerhard Weikum. In SMART@ISWC '22, Hangzhou, China, 27 October, 2022.

[Code] [Slides] [Video]

API

We added entity and relation linking functionalities to the CLOCQ API. Try it out! Get started with the CLOCQ API API usage: 109,340,164 requestsRetrieve search space (Wikidata facts) for question.

Method proposed for the SMART 2022 Task.

Method proposed for the SMART 2022 Task.